Well, my mind hasn't changed: AI won't be coming for librarian jobs any time soon. Until someone builds an AI that focuses on data and research, rather than a language model like ChatGPT, replacing us is a laughable concept.

Some of my fellow PTRC representatives have been experimenting with ChatGPT since I last posted about my initial adventures in February. For those who may not recall, I found that the AI language model couldn't perform any kind of patent or trademark search or provide specific data on patents or trademarks.

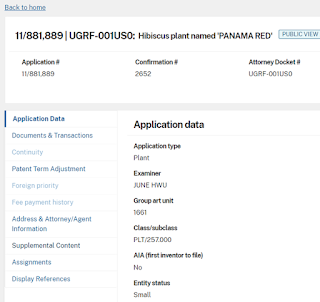

That has not changed with the past few months' updates. I tested it again by asking for the patent number assigned to the patented plant featured in my last post (Hibiscus 'Panama Red'). Again, ChatGPT was unable to give me a patent number.

*ChatGPT still can't provide patent numbers*

I decided to try the approach taken by one of those fellow reps, Stella Mittelbach of the Los Angeles Public Library Science, Technology & Patents Department. She asked for Cooperative Patent Classification (CPC) numbers that might apply to an invention and received an extensive list of potential matches. I was curious about the quality of the CPC numbers ChatGPT could provide.

In recent publications and casual conversations, I've learned that ChatGPT struggles to provide concrete data and references, sometimes producing "hallucinations": made-up information presented as fact. Furthermore, finding CPC numbers can be challenging; narrowing the candidates down to the category that most closely matches an invention is comparable to the initial stages of a patent search. So it surprised me that ChatGPT was capable of returning such specific information.

Thanks to teaching courses on finding CPC numbers and using them to search for patents, I have become extremely familiar with a few groups and subgroups. I asked ChatGPT to suggest CPC numbers for the hypothetical invention I typically use: a new contact lens material, an improved silicone hydrogel with higher oxygen permeability, that makes it safe to sleep in the lenses. Based on that description alone, I know of two CPC numbers that could apply to this invention.

*These numbers are so wrong; don't bother looking too closely.*

ChatGPT was off base. It supplied numbers that were mostly unrelated, though some used similar terminology. I followed up by asking for the definition of CPC number G02C 7/001, which I knew was for bifocal contact lenses. Again, ChatGPT failed to give accurate information.

*It's also wrong*

I corrected the AI.

*My correction spurred it to give some better information*

*Second correction, with a suggestion*

Then I asked where it was getting its information. It vaguely cited "general knowledge", which means nothing. I would classify CPC numbers as "specific knowledge": something the vast majority of people know little or nothing about. I'd wager most people are unaware the CPC even exists.

*ChatGPT seems unsure of its information sources*

Since the AI is supposed to learn from interactions, I wanted to test whether my corrections had somehow been absorbed. I emailed a copy of the transcript to PTRC colleagues and asked someone else to try my questions and share the answers they received.

Dave Bloom, Science and Engineering/PTRC Librarian at the University of Wisconsin-Madison, tried my initial prompts verbatim a few times each, using multiple ChatGPT accounts and one Google Bard account. He found that each response was "noticeably different, not necessarily informed by the other answers, and, most importantly, not reliable."

*A sample of Dave Bloom's interactions*

Like Dave Bloom, I got multiple answers. When I submitted a correction, I was able to choose a "better" answer, but someone actually trying to find a CPC number with ChatGPT wouldn't have that option. Furthermore, the better answer was based on the specific feedback I provided; a different account might receive the wrong information and have no way to correct it. OpenAI should program it to stick to instructional responses, which are never wrong.

*The next day*

*Before I made a suggestion through the system*

*After my suggestion through the system*

*On the second day...*

*the answers seemed slightly improved...*

*but were still inaccurate.*

Dave Bloom later reminded me that when using a large language model (LLM) like ChatGPT, "it's tempting to evaluate each individual response on the basis of accuracy. [...] It's not about whether any individual answer is right or wrong or whether ChatGPT or Bard is better at generating 'correct' answers, but that, even with the exact same prompt, LLMs do not produce the same results. Replicability is a baseline expectation of credible scientific research, and we should apply it to search tools, too." LLMs don't reliably cite real sources, because their function is to produce answers that appear to be written by humans. As Dave explained, this is why their fake citations and URLs are often so convincing.

So even when ChatGPT can provide an answer, that answer is at high risk of being a very bad one. Librarians have dodged the bullet again!

*I wasn't worried about you, buddy; my concern is decision makers who like to save money.*